Run Your Own AI Image Generation

Generating AI Images on a Mac with Stable Diffusion

Right now, all the rage in AI is LLMs (Large Language Models) like GPT, PaLM, and Claude. These models generally take text as input and generate text as output, and they have proven to be quite capable, with GPT-4 reportedly passing a simulated bar exam.

While it’s hard to dispute the importance of LLMs, I think a more interesting place to dip your toes into AI is image generation. It’s just more fun to think up a prompt and see a visual manifestation as a result. Some of the state-of-the-art models for image generation include DALL-E, Midjourney, and Stable Diffusion.

While the results from all of these are impressive, what sets Stable Diffusion apart is that the model code and weights have been released publicly, so you can run it yourself instead of relying on cloud services. And it’s really easy!

Setting up a python environment

The first step in running any Machine Learning code is almost always to set up a Python environment for execution. This will be opinionated, and of course you can use other tools and strategies, but what I describe should be easily reproducible.

Install asdf

Follow the official installation directions for asdf, a tool that helps install and manage different versions of Python (as well as other tools).

Install python

Navigate to the directory you want to work in and then run these commands:

asdf install python latest

asdf local python latest

This installs the latest version (3.11.3 at the time of posting) and then saves it to a local file, .tool-versions, so that whenever you are in that directory, that version is selected automatically.

Create a python virtual environment

Next we will create a python virtualenv, which will allow us to install python packages locally without being affected by those installed elsewhere.

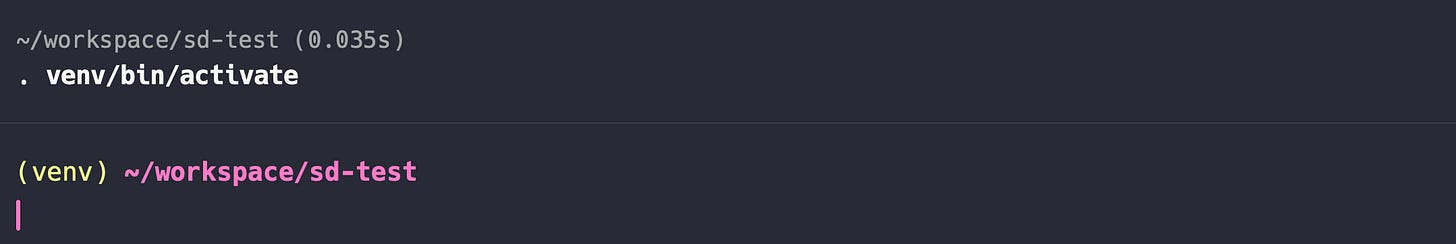

python -m venv venv

Then we activate the environment with one more command:

source venv/bin/activate

Many shell prompt setups give a visual indicator of your current Python venv.
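If your prompt does not show the active venv, one option is to ask Python itself. This is a minimal sketch rather than part of the original setup, and the function name `in_virtualenv` is made up for illustration. It relies on the fact that inside a venv `sys.prefix` points at the environment directory, while `sys.base_prefix` points at the interpreter the venv was created from.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter is running inside a venv."""
    # Inside a venv, sys.prefix is the venv directory and
    # sys.base_prefix is the installation the venv was created from.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Python version:", sys.version.split()[0])
    print("Inside a virtual environment:", in_virtualenv())
```

Running this with the venv activated should report that you are inside a virtual environment, along with the Python version that asdf selected for the directory.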

Choose a model and install python dependencies

The model we are going to use is called stabilityai/stable-diffusion-2-1-base. It is a good place to start because it is quite advanced and capable, but also runs relatively quickly. We can look at the examples section of the model card to see the dependencies that are required:

pip install diffusers transformers accelerate scipy safetensors

Create and run the program
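Before running anything, it can be worth checking that the dependencies from the pip install command are importable from the active environment. The sketch below is one way to do that using only the standard library; the helper name `missing_packages` is hypothetical, and it assumes each package's import name matches its pip name, which holds for the five packages listed here.

```python
import importlib.util


def missing_packages(names):
    """Return the names from `names` that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Package names taken from the pip install command.
    required = ["diffusers", "transformers", "accelerate", "scipy", "safetensors"]
    missing = missing_packages(required)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All dependencies are importable.")
```

If anything is reported missing, the usual culprit is that the venv was not active when pip ran.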

The code to execute a stable diffusion model is quite short and simple:

# index.py

from diffusers import StableDiffusionPipeline

# set up a stable diffusion pipeline

pipe = StableDiffusionPipeline.from_pretrained("stabilityai/stable-diffusion-2-1-base")

pipe = pipe.to("mps")

prompt = "a photo of an astronaut riding a horse on mars."

image = pipe(prompt).images[0]

image.show()  # Display the image

StableDiffusionPipeline.from_pretrained() does all the heavy lifting here for us. According to the docs, we can simply supply the repo id of a model from huggingface.co, and all of the configuration to run it is automatically loaded.

The other very important bit here is this line:

pipe = pipe.to("mps")

This configures the underlying math crunchers to use Metal, Apple’s hardware acceleration API, through PyTorch’s MPS (Metal Performance Shaders) backend. In many examples you will see the value “cuda” used instead of “mps”. Without going into more detail, CUDA is what you want when running on Windows or Linux with an NVIDIA GPU, but on a Mac you will always need to change this or you will run into issues like the ones in my last post.
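If you want the same script to work on more than one machine, the device string can be chosen at runtime instead of hard-coded. The following is a rough sketch, not something from the model card: the function names `pick_device` and `detect_device` are made up for illustration, and it assumes a reasonably recent PyTorch that exposes `torch.backends.mps.is_available()` alongside `torch.cuda.is_available()`.

```python
def pick_device(has_mps: bool, has_cuda: bool) -> str:
    """Choose a PyTorch device string, preferring Apple's MPS backend."""
    if has_mps:
        return "mps"
    if has_cuda:
        return "cuda"
    return "cpu"


def detect_device() -> str:
    """Probe PyTorch for available accelerators; fall back to "cpu"."""
    try:
        import torch
    except ImportError:
        # PyTorch is not installed, so there is nothing to probe.
        return "cpu"
    return pick_device(
        has_mps=torch.backends.mps.is_available(),
        has_cuda=torch.cuda.is_available(),
    )


if __name__ == "__main__":
    print("Selected device:", detect_device())
    # In index.py this could replace the hard-coded string:
    # pipe = pipe.to(detect_device())
```

Falling back to "cpu" should still work, but expect generation to be much slower than on an accelerator.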

With the above code in place, change the prompt to whatever you like and run it:

python index.py

You will see some additional output, and the first run will take a bit longer because the code needs to download the model parameters. On my hardware (2021 MacBook Pro, M1 Pro, 16GB) it takes about 50 seconds to generate an image with this default config. The image will open automatically when finished and you can choose to save it if you wish.
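If you would rather save every image automatically and measure the generation time yourself, something along these lines could work. It is a sketch under a few assumptions: the helper names `prompt_to_filename` and `generate_and_save` are hypothetical, the file naming scheme is just one possible choice, and saving relies on the pipeline returning a PIL image (which is also what makes `image.show()` work).

```python
import re
import time


def prompt_to_filename(prompt: str, extension: str = "png") -> str:
    """Turn a prompt into a filesystem-friendly file name."""
    # Lowercase, then collapse every run of non-alphanumerics into "-".
    slug = re.sub(r"[^a-z0-9]+", "-", prompt.lower()).strip("-")
    # Keep names short and fall back to a default for empty prompts.
    slug = slug[:60].rstrip("-") or "image"
    return f"{slug}.{extension}"


def generate_and_save(prompt: str, device: str = "mps") -> str:
    """Generate one image, save it, and return the file name."""
    # Imported here so the helper above works without diffusers installed.
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(
        "stabilityai/stable-diffusion-2-1-base"
    ).to(device)

    start = time.perf_counter()
    image = pipe(prompt).images[0]
    print(f"Generated in {time.perf_counter() - start:.1f} seconds")

    filename = prompt_to_filename(prompt)
    image.save(filename)  # PIL infers the format from the extension
    return filename


if __name__ == "__main__":
    print(prompt_to_filename("a photo of an astronaut riding a horse on mars."))
```

Calling `generate_and_save(prompt)` from your own script would then write the image into the current directory. The timer only covers the generation step, not the model download or loading.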

Success!

I spent a fair amount of time figuring this out, searching issues and going down rabbit holes that ultimately don’t seem to be necessary today. I hope this post helps you to get started running Machine Learning algorithms on your own hardware. Let me know in the comments if you run into any issues, or if you don’t, share your creations!
